It was a lot – to be fair I may have gone overboard.

When Danny Sullivan (Google’s Search Liaison) reached out to ask if I’d be interested in visiting Google on October 28th and 29th, I immediately accepted.

The last 2 years have been exceptionally hard on TechRaptor, as I laid out in the introduction “speech” I gave at the start of the event.

There have been other Web Publisher/Creator Events in the past, and after doing this for 11 years, I was eager to add my voice to the conversation. After all, my main goal has been to contribute to the games industry in a meaningful way, and this was a chance to share my thoughts, opinions, and ideas directly with the Google Search Team.

Before the event, Danny and I went back and forth on Twitter about how the 20 of us attending could use this time, and attention, to pass along as much information as possible. In a follow-up email, he told us to send over what we had:

> Some of you have examples you asked about sharing, either involving your site, or queries in general, or other types of feedback. We do want that. Ideally, put this into a Google Doc (or any text format of your choice) and share it with me. I’ll make sure it’s available to all the Googlers involved with Google Search. You can refer to such information in your remarks, if you like. If you prefer to do slides, or a video, or whatever, that’s also fine. Some of you have done blog posts, and those will also be shared if you provide links (I know many of those already, of course). We’ll make all of this available. – Danny Sullivan

Personally, I’m a “write it all down” type of person, so I went the Google Doc route.

In total, I sent over 20 pages of details to Google, plus an additional 6 pages sourced from other site owners I talk with frequently.

I started the document with my speech, and then laid out the history of TechRaptor’s traffic drops.

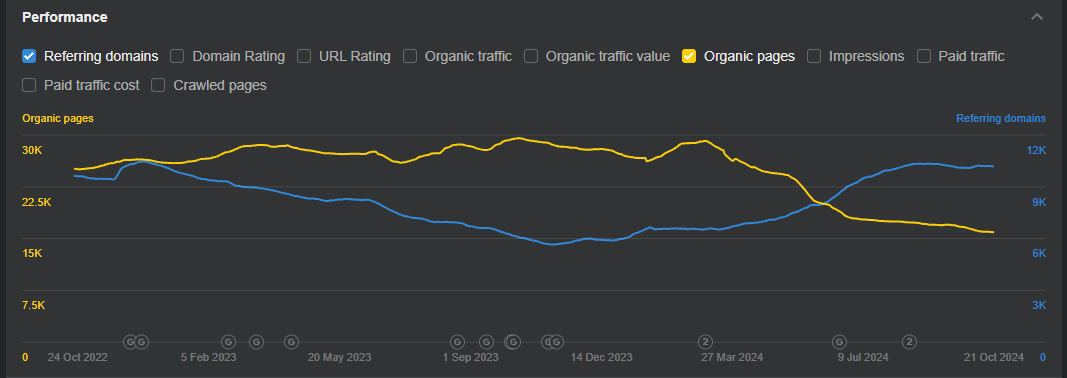

- September 2022 – Product Reviews Update – 50% Drop in Visibility (SISTRIX)

  - By far the most brutal drop we’ve ever received.

- October 2022 – Spam Update – 10% Drop in Visibility

- December 2022 – HCU Update – No Change

- December 2022 – Link Spam Update – No Change

- February 2023 – Product Reviews Update – 5% Drop

- March 2023 – Core Update – 5% Drop

- April 2023 – Product Reviews Update – No Change

- June 19, 2023 – No Update – 200% Increase

- August 25, 2023 – Core Update – 5% Increase

- September 19, 2023 – HCU – No Change

- October 11, 2023 – Spam Update – Start of a New Gradual Decline

- November 2023 – Core/Reviews Update – Continued Decline

- March 2024 – Core Update – Slight Improvement

- May 2024 – Site Reputation Abuse Update – 50% Drop

  - Danny told us that no algorithmic Site Reputation change rolled out on May 6th, yet our visibility dropped 50% after that day. We did not receive a manual action.

  - Please explain this.

- June 2024 – Spam Update – No Change

- August 2024 – Core Update – No change until the last day, then another 40% loss.

After that, I included two sections I won’t be sharing online, as they contain some in-depth details about TechRaptor:

- History of Development Changes Made Since 2021

  - I will note that I’ve invested well over $100,000 in development to improve our website over the last 4 years, with UX as the primary focus.

- A massive list of questions that I had sent to Amsive during our 2024 audit.

I also shared links, both on and off TechRaptor, highlighting search concerns and interviews I’d given about TechRaptor.

Then we get into the “meat of it” – my thoughts on specific concerns, and some potential solutions that could be explored.

Reddit is a Poor Source – Consistent #1 rankings don’t make good SERPs

It’s incredibly frustrating to search to see where one of your guides (or anything else) is ranking, only to find Reddit as the #1 Google result.

Usually, these posts are mediocre at best, 2-3 sentences with all sorts of stuff in the comments.

Sometimes those comments are full of SEOs gaming the system, and sometimes they’re useful, but the core issue is that just because something is on Reddit doesn’t mean it’s “Helpful.”

I consistently come across Reddit threads lifting content from the sites that created it, or, more frequently now, Reddit posts that have been deleted and scrubbed still sitting at #1.

There are plenty of subreddits where the mods are paid to promote certain content. How is that any different from Site Reputation Abuse?

In addition, we’re now seeing Reddit posts surfaced in Discover instead of the ACTUAL ARTICLE they link to. As with syndicated content on Yahoo, this is not what should be surfaced as the “source” of an article.

This is a problem. Syndicated content is one thing; there, the publisher is likely getting kickbacks from MSN or Yahoo. On Reddit, we get next to nothing: a fraction of the traffic we should receive, and low-quality traffic at that.

I can understand that Reddit may be easier to surface because its communities do the moderating and Google doesn’t have to, but that’s a flawed system.

Google’s strength for the longest time has been its ability to surface, moderate, and filter a massive web to show users content.

Right now, it feels like Google is leaving that sorting and ranking up to paid deals and third-party moderation.

I do have faith that Google can get it right, and a big part of doing that would be rolling back the HCU modifiers and not over-surfacing Reddit. I’d ask for fewer ads and less AI too, but that’s an unrealistic wish given Google’s focus on both.

Spam is out of Control in the SERPs and Google News

I feel like I’m constantly fighting spam. On top of getting daily Google News Alerts with examples like the below, I also have HUNDREDS of websites copying my entire site, not only generating dofollow links but profiting off my team’s hard work.

I mainly use the free versions of Semrush and Ahrefs to keep an eye on links, and I update our disavow file as needed, with new sites generating tens to hundreds of thousands of new spam links weekly.

I know that we’ve been told “don’t disavow,” but when I look at our graph of new referring domains vs. traffic, it looks pretty suspicious, right?

When I search for certain articles we’ve written, or even just our name, I get tons of spam results. The fact that they rank at all is what worries me; that’s MY content.

It’s rare that they actually outrank us, but that doesn’t mean they should be there. I’ll outline some ideas below on how we might get around this.

Spam will always exist in some fashion, and AI has accelerated this – especially with Google actively touting AI writing tools in its own products.

Potential Google Search Solutions for Long-Term Publisher Health

Verify Websites

This will take some money, effort, and people – but I have no doubt that Google could afford to make the investment into something like this.

Look at what’s happened to the value of the blue check on X: it’s lost any meaning by being used to promote slop in exchange for additional views. That’s very much NOT what I’m proposing.

What I am proposing is that Google develop a system that verifies independent publishers, such as TechRaptor and the others here, against a few criteria:

- Size – have they reached a sufficient size to be assessed?

  - Measure this in impressions or clicks.

- Editorial Standards

  - Do they have written policies that clearly define their ethics and/or review scoring?

- Structure

  - Is the structure of the site sufficient? That is, is it reasonably well designed for user experience?

- Ad Experience

  - Make this mean something. In the past, interstitials were a no-no; now I see sites using them outranking us.

  - Look for sites that do their best, but aren’t perfect, in displaying their ads.

  - Bonus point: maybe be clearer about what counts as a bad ad experience? Every site, network, and even AdSense AutoAds interpret things differently.

- Have a Touchpoint

  - To be verified, the site would need to meet with someone from Google to explain who they are, their vision for the site, and how they create content.

Should a site sufficiently meet these criteria, it becomes “verified” in GSC. This wouldn’t display anything outwardly, but it could help Google’s algorithms better recognize when content has been lifted and republished, reducing the spam problem.

I don’t think weighting these sites higher solves anything, but it might help us understand things a bit better, make us feel engaged with Google, and reassure us that we’re on the right track.

Crack Down Harder on Scrapers & AI

I can’t really say much more than that it’s immensely frustrating to see so much of this in Discover & Search.

Before 2022, it wasn’t this bad; it’s just plain nasty now. I’m not tech-savvy enough to figure this out, but I’m sure it’s contributing.

Give us Better Advice

I think one of the most frustrating things for me is not understanding what we’ve done wrong.

Is our structure bad? Did we over-tag things? Bad Schema? Missing Schema that should be there? Were we hit for affiliate links? What do you consider affiliate links? What’s wrong with our reviews?

I think that’s one thing Google could really do to improve the ecosystem. You can give advice in GSC without giving away all the secrets.

Heck, even a “wow, this content is just not great” section would rule. I’d invest in fixing it, for SURE. I hate having poor content on the site, and I’ve ruthlessly cut it in the past.

Corporate Media Wins Too Much, and that’s Bad

It’s way too easy for corporate media sites to win. They can put out a crazy amount of content without penalization and beat out sites focused on that area without much effort.

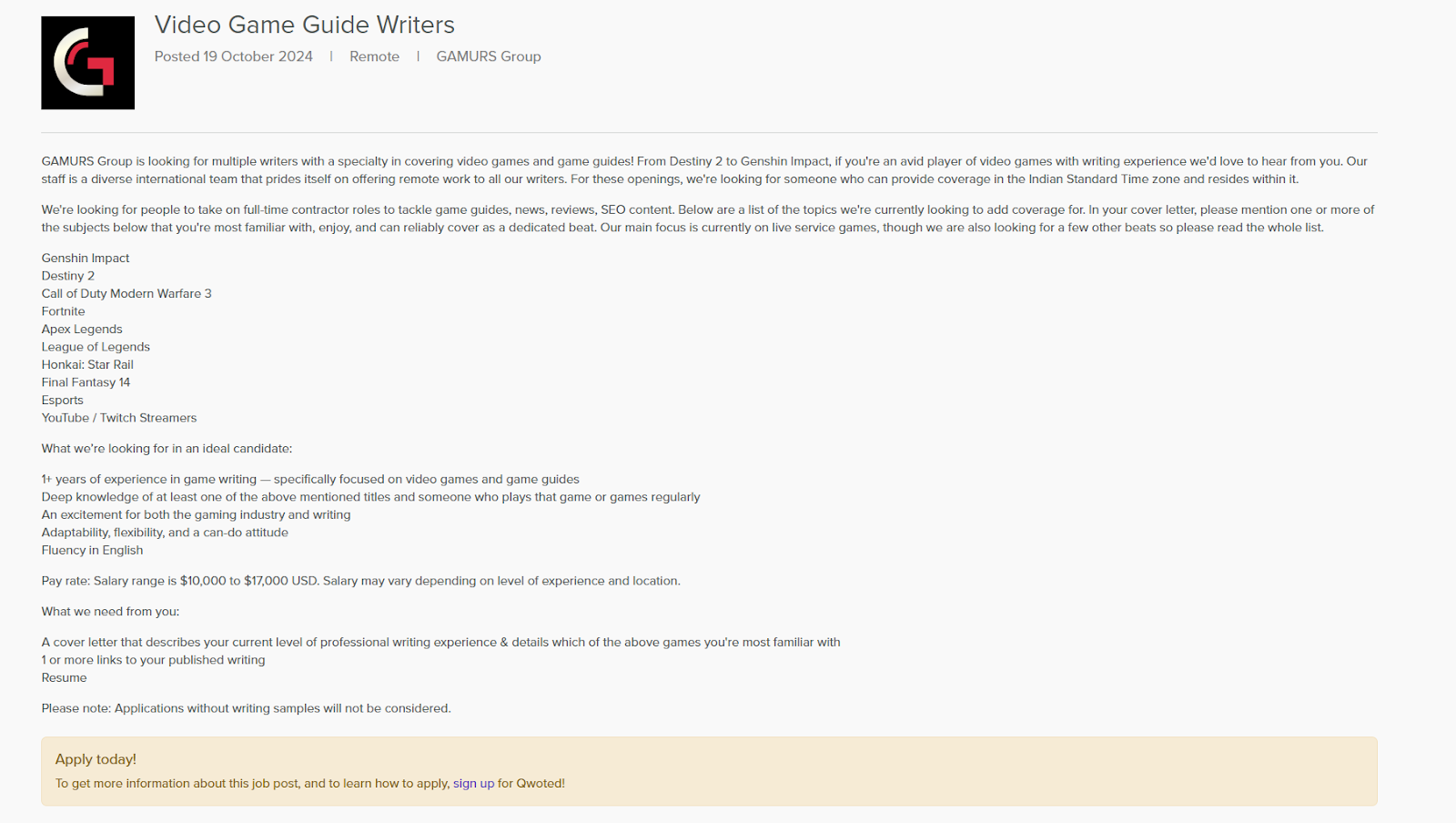

I mean, look at what one of the largest games media orgs is paying people for FULL TIME work (10-17k/yr). This is disgusting. TechRaptor pays 3-4x this for our lowest-paying roles, even with 1/10th the traffic of Gamurs’ websites.

Sites owned by companies like Valnet and Gamurs generally win in Discover with clickbait and misleading titles, yet TechRaptor, using standard, descriptive titles, gets ZERO Discover traffic.

There’s an unfair playing field here – they have more money but they pay people far less, burn out writers consistently, and yet still snatch up sites and do mass layoffs.

Independent Media, like TechRaptor and the others at this event, suffer while they’re allowed to continue to exploit people for profit.

Valnet can use its 200+ posts per day, per site, to build a massive web of interlinking with ease, something most of us, with small, hard-working teams, couldn’t hope to compete with.

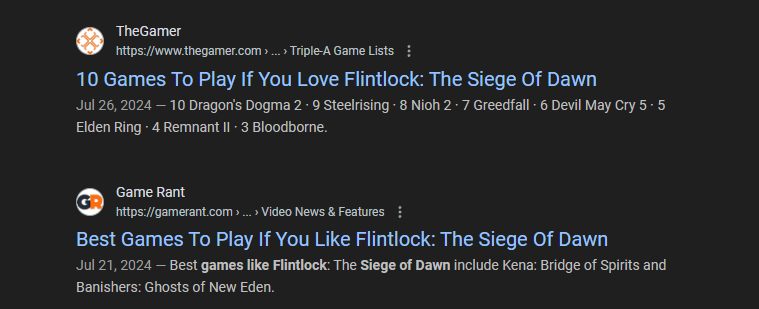

What we’ve seen is that HUGE media sites like Forbes, and corporate-owned media in general, have been able to expand the umbrella of what they cover and win with ease.

There has to be a way to level this out. These days I see Fandom ranking at the top of everything just because it’s a wiki. That doesn’t mean it’s good: my team has more complete guides, with every necessary detail, but a Fandom page with ¼ of the information ranks higher? Just because it’s Fandom?

TechRaptor used to consistently rank in the top 5 for our detailed guides; now many of them are completely gone from the first page of results. There’s no real indication of why, other than hits from the Product Reviews Updates (which shouldn’t impact guides?) and HCUs.

How can we, as publishers, continue to operate and pay our people if we can’t compete just because some site has higher “authority” from having more money to spend on churning out content?

Remove the “Shadowban”

There was a clear “Shadowban” of sorts that the HCU imposed on sites, and by proxy some of the Review Updates too, like the one that hit us in September of 2022.

These shadowbans throttle traffic site-wide, but we have no indication of what we did wrong, or how to recover. It’s infuriating to have a blanket suppression algorithm that we have no clear guidance on how to get rid of.

Yes, Google has some documentation but it’s not “Helpful Content” in our eyes. It’s basically just “Write Good Content, and hope for the best next update.”

I highly doubt that’s how Google runs itself as a business, hoping that things work out for the best. Then again, the Google Graveyard is massive.

If the plan is to keep the HCU/Product Reviews suppression in place, at least help us understand which signals cause it – I have a VERY hard time believing that

Meet With Us Directly

I think what’s missing from Search is relationship-building with creators and publishers. It should be a symbiotic relationship, but with the AI direction Search has taken, it now feels somewhat parasitic.

Meet with us more. Create a Publisher Success team that meets with, and provides advice to, Publishers who have a proven track record, and are investing in their UX and Content.

Help us make the improvements Google wants to see, but also work with us to minimize the harm that search changes are doing to us, our teams, and our industries.

Be good, do no evil.

I’m hopeful the event tomorrow will be a productive one: that Google will not only listen to our thoughts, concerns, and ideas, but also work with us (and other web publishers) to help us get back to creating jobs (and content).

There’s no NDA or set of guidelines for this event; we’re all welcome to share pictures, thoughts, and discussions publicly. I plan to do so after the event, on the long plane ride home. Follow me on Bluesky to see when that goes up, but in the meantime you can read the “speech” I’m giving to introduce myself at the start of the event.
